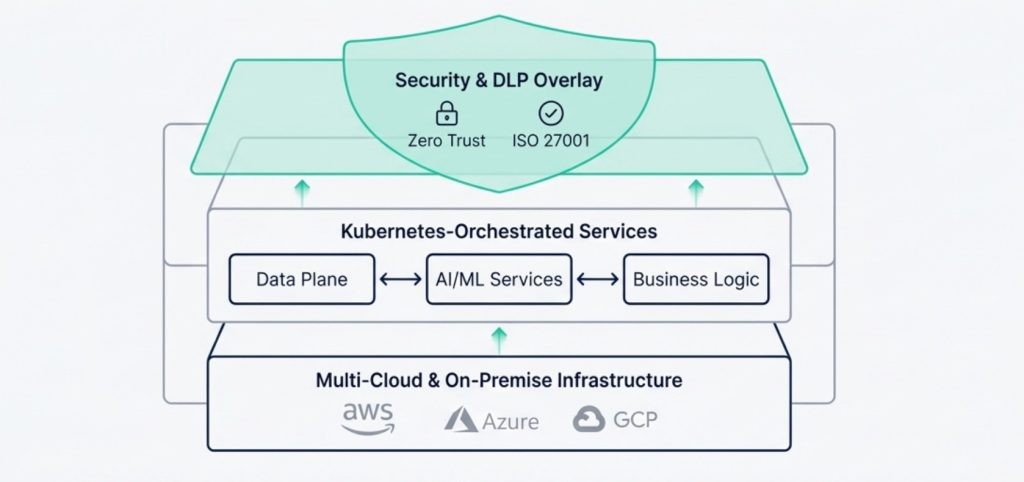

AIBI-Studio is built on a cloud-native, multi-tenant, horizontally scalable architecture that scales from 100 predictions per day to 100 million without changing a single line of your code.

Deployments can start on our shared high-performance tenant and seamlessly graduate to dedicated VPCs or on-premise/air-gapped clusters when needed. Inference is serverless and pay-per-use, IoT ingestion handles millions of events per second (proven with Smart24x7), and dashboards are served globally via CDN with sub-200 ms latency.
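To put those volumes in perspective, the short sketch below converts the figures quoted above into average per-second and per-day rates. It is plain arithmetic only; the function names are illustrative and are not part of any AIBI-Studio API, and real traffic is rarely uniform, so peak rates will be higher than these averages.

```python
# Back-of-the-envelope conversion between daily and per-second rates.
# Function names are hypothetical helpers, not an AIBI-Studio API.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def avg_per_second(count_per_day: float) -> float:
    """Average rate per second for a given daily volume."""
    return count_per_day / SECONDS_PER_DAY


def total_per_day(rate_per_second: float) -> float:
    """Daily volume for a sustained per-second rate."""
    return rate_per_second * SECONDS_PER_DAY


if __name__ == "__main__":
    # 100 predictions/day is roughly one prediction every 14 minutes.
    print(f"{avg_per_second(100):.5f} predictions/s")
    # 100 million predictions/day averages a little over a thousand per second.
    print(f"{avg_per_second(100_000_000):.1f} predictions/s")
    # One million events/s sustained for a day.
    print(f"{total_per_day(1_000_000):,.0f} events/day")
```

The span between the two prediction volumes is six orders of magnitude, which is why the scaling has to happen in the platform rather than in application code.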

Main Modules Implemented

- Multi-cloud & On-Premise Kubernetes Orchestration

- Serverless Prediction Endpoints (pay-per-use)

- 10M+ events/second IoT Ingestion Engine

- Auto-scaling MLOps & Canary Deployments

- Global Low-Latency Dashboard CDN

- 99.99% Uptime SLA
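As a rough illustration of what a 99.99% uptime figure allows, the sketch below computes the downtime budget for a given period. It assumes the SLA is measured over simple calendar time; the actual measurement window and exclusions are defined by the SLA terms, which are not covered here, and the function name is hypothetical.

```python
# Downtime budget implied by an uptime percentage.
# Assumes uptime is measured over plain calendar time (an assumption;
# the real SLA terms may define the window and exclusions differently).
MINUTES_PER_DAY = 24 * 60


def downtime_budget_minutes(sla_percent: float, period_days: int) -> float:
    """Maximum minutes of downtime allowed in the period at the given SLA."""
    total_minutes = period_days * MINUTES_PER_DAY
    return total_minutes * (1 - sla_percent / 100)


if __name__ == "__main__":
    # About 4.3 minutes in a 30-day month.
    print(f"{downtime_budget_minutes(99.99, 30):.2f} min per 30-day month")
    # About 52.6 minutes in a 365-day year.
    print(f"{downtime_budget_minutes(99.99, 365):.2f} min per year")
```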

Detailed Implementation & Outcomes

Start small, scale infinitely:

- Pilot stage: Shared tenant, <$500/month

- Growth stage: Dedicated VPC, auto-scaling clusters

- Enterprise stage: Private cloud or on-premise with full air-gap option